Forest-of-Thought: an emerging paradigm for advanced LLM reasoning

FoT mimics human-like reasoning through selective attention, adaptive error correction and collaborative decision-making, implemented via sparse activation of reasoning paths, dynamic self-correction and consensus-driven outputs 🧠

In this article, we discuss test-time compute and give an overview of the key components of the Forest-of-Thought approach.

From Chain-of-Thought to Forest-of-Thought: Scaling Test-Time Compute for Advanced LLM Reasoning

Let's take a deep dive into a new paradigm for reasoning in Large Language Model (LLM) inference. If you’ve followed the evolution of LLM prompting paradigms, you’ve probably heard of Chain-of-Thought (CoT) prompting. This method prompts the model to write out its intermediate reasoning steps as it solves a problem. Yet as tasks become more complex and the stakes of correctness rise (think finance, medicine, physics, and intricate logical puzzles), researchers have looked for even more powerful methods.

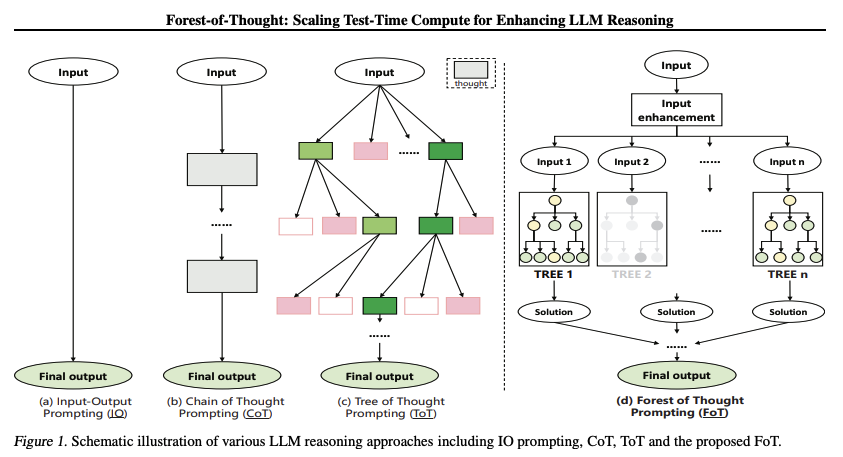

We’ve seen approaches that use additional compute time at inference - commonly referred to as "test-time compute" or "inference-time compute" - to scale the complexity and depth of reasoning. We’ve also seen developments like Tree-of-Thought (ToT) prompting, where reasoning chains branch out into a tree structure, allowing the model to explore multiple solution paths in parallel and then select the most promising one. But this approach, while useful, can plateau in performance as complexity increases.

Now, a new strategy enters the scene: Forest-of-Thought (FoT) prompting. Instead of relying on a single tree, FoT constructs multiple reasoning trees, forming a “forest” of reasoning paths in which each tree may represent a unique perspective, domain expertise, or approach. By running these trees in parallel, dynamically activating only the most promising branches, and then combining their results via consensus mechanisms, we can push beyond the performance plateaus of simpler methods.

In this post, we’ll break down the concepts behind test-time compute, the difference between test-time compute and test-time training, and how Forest-of-Thought prompting brings an exciting, human-like approach to large-scale reasoning. We’ll also touch on related techniques such as Monte Carlo Tree Search (MCTS) with self-refinement, and how they tie into the broader goal of pushing LLM reasoning capabilities to new heights.

Test-Time Compute vs. Test-Time Training

Before we jump straight into the forest, let’s clarify a few fundamental concepts.

Test-Time Compute: This refers to allocating additional computational resources during the inference phase of an LLM—when the model is actually generating an answer to a user query. Instead of spitting out a quick answer at maximum token generation speed, the model takes more time (maybe one, two, or even five minutes) to deeply reason about the problem. During this process, it might generate multiple candidate solutions, refine them iteratively, run error checks, and then produce a final, more accurate result. Crucially, test-time compute does not alter the model’s parameters; it’s just giving the model more “thinking time” and possibly more parallel computation (like using more GPUs or distributed nodes) to explore broader reasoning paths.
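The sketch below illustrates the core trade: it spends more inference-time compute by sampling several answers and keeping the most common one (a strategy often called self-consistency), while the “model” itself never changes. The `sample_answer` function is a hypothetical stand-in for an LLM call, simulated as a noisy multiplier; the slip rate and sample counts are arbitrary assumptions chosen only for illustration.

```python
import random
from collections import Counter

def sample_answer(a: int, b: int, rng: random.Random, slip_rate: float = 0.6) -> int:
    """Hypothetical stand-in for one sampled LLM answer to 'a * b = ?'.
    The model's 'weights' (here: slip_rate) never change; we only sample more."""
    correct = a * b
    if rng.random() < slip_rate:
        # An arithmetic slip: one of four plausible wrong answers.
        return correct + rng.choice([-10, -1, 1, 10])
    return correct

def answer_with_budget(a: int, b: int, n_samples: int, rng: random.Random) -> int:
    """Spend more test-time compute: draw n_samples answers, return the most common one."""
    votes = Counter(sample_answer(a, b, rng) for _ in range(n_samples))
    return votes.most_common(1)[0][0]

def accuracy(n_samples: int, trials: int = 500, seed: int = 0) -> float:
    """Fraction of trials in which the voted answer to 17 * 23 is correct."""
    rng = random.Random(seed)
    hits = sum(answer_with_budget(17, 23, n_samples, rng) == 17 * 23 for _ in range(trials))
    return hits / trials

if __name__ == "__main__":
    for n in (1, 5, 25):
        print(f"{n:>2} samples per question -> accuracy {accuracy(n):.2f}")
```

Each individual sample is still wrong most of the time, but because the wrong answers scatter while the right one repeats, a larger sampling budget makes the vote far more reliable without touching any parameter.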

Test-Time Training: By contrast, test-time training involves temporarily updating the model’s parameters during the inference stage. Imagine you have an LLM that’s well-trained but suddenly encounters a data distribution or problem domain it has never seen before. With this approach, you can quickly fine-tune the model’s weights on a few synthetic or domain-specific examples—right then and there—so that it adapts to this new scenario. Test-time training allows the model to become more specialized on the spot, but it’s conceptually different from test-time compute. While test-time compute is about more thinking time with fixed knowledge, test-time training is about updating and adapting the model’s internal knowledge at inference time.

Beyond Chains and Trees: Enter the Forest-of-Thought

Chain-of-Thought (CoT) prompting was a breakthrough approach: guiding the model to show its reasoning steps leads to more interpretable and often more accurate final answers. Tree-of-Thought (ToT) prompting extended this idea by branching the reasoning chain into multiple pathways, allowing the model to explore various solution paths. If the model got stuck or made a subtle error in one branch, another branch might still lead to the correct solution. However, as research teams discovered, scaling ToT by increasing the number of branches eventually hits a performance plateau: beyond a certain size, growing the tree further yields little additional accuracy.

Forest-of-Thought (FoT) takes the next logical leap. Instead of one big reasoning tree, you run multiple trees in parallel. Think of each tree as a separate expert or perspective: one tree might reason from a physics standpoint, another from a mathematical angle, and another from a financial or medical perspective. By diversifying the initial conditions and reasoning frameworks of each tree, we create a forest of expertise. After these trees grow and prune their internal branches, we bring them together in a final step, where all “experts” vote on the best solution. The intuition is similar to assembling a panel of specialists to solve a tough problem: several heads are better than one, especially if they bring different insights.
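To make the forest idea concrete, here is a minimal toy sketch in Python. It assumes that integers stand in for reasoning states, a hypothetical `score` function stands in for the evaluator, a hypothetical `expand` function stands in for the LLM proposing next thoughts, and different start points play the role of diverse initial conditions. Each tree is a small beam search; the forest takes a majority vote over the trees' answers. None of these names or parameters come from the FoT paper.

```python
from collections import Counter

def score(x: int) -> float:
    """Hypothetical evaluator: global optimum at 70, tempting local optimum at 20."""
    return -min((x - 20) ** 2 + 50, (x - 70) ** 2)

def expand(x: int) -> list[int]:
    """Hypothetical 'next thought' generator: small and large steps, clipped to [0, 100]."""
    return [c for c in (x - 5, x - 1, x + 1, x + 5) if 0 <= c <= 100]

def grow_tree(start: int, depth: int = 10, beam_width: int = 2) -> int:
    """One reasoning tree: beam search from one initial condition; returns its best state."""
    frontier, best = [start], start
    for _ in range(depth):
        children = {c for x in frontier for c in expand(x)}
        # Keep only the highest-scoring children (ties broken by value for determinism).
        frontier = sorted(children, key=lambda c: (score(c), c), reverse=True)[:beam_width]
        if score(frontier[0]) > score(best):
            best = frontier[0]
    return best

def forest_of_thought(starts: list[int]) -> tuple[int, Counter]:
    """Grow one tree per initial condition, then take a majority vote over their answers."""
    answers = Counter(grow_tree(s) for s in starts)
    return answers.most_common(1)[0][0], answers

final, votes = forest_of_thought([10, 30, 50, 60, 90])
print(votes)
print(final)
```

With these start points, the trees started at 10 and 30 settle on the local optimum at 20, while the other three reach 70, so the vote returns 70. A single tree started in the wrong region would have stopped at 20; that is the failure mode the forest is meant to outvote.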

Key Components of the Forest-of-Thought Approach

The Forest-of-Thought framework combines three main pillars to achieve superior reasoning performance:


Sparse Activation of Reasoning Paths

In a large forest, not all paths are equally valuable. Sparse activation ensures that only the most promising branches receive further computational attention. This mirrors human cognitive efficiency: when solving problems under time pressure, humans focus on the most relevant aspects. The model employs a scoring function (which could be as simple as a semantic-similarity check, for example between a candidate step and the question, or as complex as a domain-specific correctness metric) to decide which nodes and branches to explore further. By pruning unproductive reasoning early, FoT saves time and compute while zeroing in on the best solutions.
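A minimal sketch of sparse activation, assuming the simplest kind of scorer mentioned above: word-set (Jaccard) overlap between each candidate branch and the question, used here as a crude, hypothetical stand-in for semantic similarity. Branches below a threshold are dropped and at most `k` survivors are kept; `sparse_activate`, `k` and `min_score` are illustrative names, not part of any published implementation.

```python
def lexical_similarity(a: str, b: str) -> float:
    """Jaccard overlap of word sets: a crude, hypothetical stand-in for semantic similarity."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0

def sparse_activate(branches: list[str], reference: str,
                    k: int = 2, min_score: float = 0.3) -> list[tuple[str, float]]:
    """Score every branch, drop those below min_score, and keep at most k of the rest.
    Only the returned branches would be expanded further; the others get no more compute."""
    scored = [(b, lexical_similarity(b, reference)) for b in branches]
    survivors = [(b, s) for b, s in scored if s >= min_score]
    return sorted(survivors, key=lambda bs: bs[1], reverse=True)[:k]

question = "area of circle with radius 3"
branches = [
    "use area of circle formula with radius 3",
    "compute circumference of circle",
    "guess a random number",
    "area with radius 3 squared times pi",
]
for branch, s in sparse_activate(branches, question):
    print(f"{s:.2f}  {branch}")
```

Here the circumference branch (score 0.25) and the random guess (score 0) are pruned, leaving the two area branches. Lexical overlap also rewards branches that merely restate the question, which is why a domain-specific correctness metric is often the better scorer when one is available.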

Dynamic Self-Correction

One major challenge with large reasoning structures is the risk of error propagation. A single mistake early in a reasoning chain can cascade into nonsense in later steps. FoT tackles this head-on with dynamic self-correction. As the LLM explores reasoning paths, it periodically evaluates them for correctness. If a path’s score falls below a certain threshold, the system flags it for revision. This might involve calling a specialized mini-model (like a math expert) or simply revisiting the logic to correct errors. Over time, this prevents small mistakes from snowballing into complete solution failures.
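The following toy sketch shows why checking each step as it is produced matters. A hypothetical `noisy_step` plays the LLM and slips by one on the first step; a hypothetical `check` plays the verifier, and `revise` plays the specialist “math expert”. Any step scoring below `threshold` is revised before later steps build on it. All names and the arithmetic task are assumptions for illustration.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def noisy_step(i: int, value: int, op: str, operand: int) -> int:
    """Hypothetical LLM step that slips by +1 on the first step only."""
    result = OPS[op](value, operand)
    return result + 1 if i == 0 else result

def check(value: int, op: str, operand: int, claimed: int) -> float:
    """Hypothetical verifier: 1.0 if the claimed step is right, else 0.0."""
    return 1.0 if OPS[op](value, operand) == claimed else 0.0

def revise(value: int, op: str, operand: int) -> int:
    """Hypothetical specialist ('math expert') that recomputes the step."""
    return OPS[op](value, operand)

def run_chain(start: int, steps: list[tuple[str, int]],
              threshold: float = 0.5, self_correct: bool = True) -> tuple[int, int]:
    value, corrections = start, 0
    for i, (op, operand) in enumerate(steps):
        claimed = noisy_step(i, value, op, operand)
        # Check each step immediately, before later steps build on it.
        if self_correct and check(value, op, operand, claimed) < threshold:
            claimed = revise(value, op, operand)
            corrections += 1
        value = claimed
    return value, corrections

steps = [("*", 6), ("+", 8), ("-", 5)]  # intended: ((7 * 6) + 8) - 5 = 45
print(run_chain(7, steps, self_correct=False))  # (46, 0): the early slip reaches the answer
print(run_chain(7, steps, self_correct=True))   # (45, 1): one correction, correct answer
```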

Consensus-Guided Decision-Making

After generating many reasoning paths across several trees, FoT uses a consensus mechanism to produce the final answer. Each tree (or subtree) contributes a candidate answer, and a majority vote over these candidates yields the final solution. For more nuanced cases, a domain expert model (perhaps one specialized in medical reasoning) can break ties or evaluate subtle differences between candidate solutions. This consensus phase mimics human committees, where collective intelligence often outperforms individual reasoning.
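A minimal sketch of consensus-guided decision-making: a plain majority vote over the trees' final answers, with a hypothetical expert consulted only when the vote is tied. The `expert_confidence` table stands in for a domain model's judgment and is invented for illustration.

```python
from collections import Counter
from typing import Callable, Sequence

def consensus(answers: Sequence[str], break_tie: Callable[[list[str]], str]) -> str:
    """Majority vote over tree answers; ties go to a (hypothetical) domain-expert tie-breaker."""
    if not answers:
        raise ValueError("need at least one candidate answer")
    counts = Counter(answers)
    top = max(counts.values())
    tied = [a for a, c in counts.items() if c == top]
    return tied[0] if len(tied) == 1 else break_tie(tied)

# Hypothetical expert: returns the candidate it is most confident in.
expert_confidence = {"42": 0.9, "41": 0.4, "24": 0.2}

def expert(candidates: list[str]) -> str:
    return max(candidates, key=lambda c: expert_confidence.get(c, 0.0))

print(consensus(["41", "41", "42"], expert))        # "41": a clear majority wins outright
print(consensus(["41", "42", "41", "42"], expert))  # "42": 2-2 tie, the expert decides
```

Note the design choice: the expert never overrides a clear majority, so its (possibly biased) preference only matters when the trees genuinely disagree.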

Why Forest-of-Thought Matters

The FoT methodology holds significant promise for complex, domain-intensive tasks. Consider advanced mathematics, intricate logic puzzles, or highly specialized medical diagnostics. Traditional CoT might provide a good first guess, and ToT improves upon that, but only to a point. FoT leverages a diversity of reasoning paths, dynamic correction, and collaborative decision-making to surpass previous accuracy plateaus.

In experiments discussed in recent literature, researchers found that scaling from a single tree to multiple trees yields substantial gains in problem-solving accuracy. By carefully pruning weak nodes, dynamically correcting errors, and integrating the wisdom of multiple “expert” trees, FoT can achieve near-perfect accuracy on certain specialized tasks.

Moreover, FoT is conceptually aligned with human problem-solving. Humans often break down complex problems by consulting multiple experts (like assembling a panel of specialists), focusing attention on critical subproblems, and repeatedly revising hypotheses. FoT’s sparse activation, self-correction, and consensus mirror these human cognitive strategies, offering a more natural and interpretable approach to LLM-based reasoning.

Differentiating from Test-Time Training

It’s important to remember that FoT is about test-time compute, not test-time training. In FoT, we are not updating the model’s internal parameters; we are only applying more computational effort, complexity, and time at inference. The model’s “knowledge” remains the same. By contrast, test-time training would involve on-the-fly parameter updates, a kind of real-time adaptation to new or out-of-distribution tasks. Both are valuable tools in the LLM toolbox, but they serve different purposes. Test-time compute (FoT) refines reasoning by exploring multiple solution paths. Test-time training updates what the model “knows” to better handle unfamiliar distributions.

Related Work: Monte Carlo Tree Search & Self-Refinement

In parallel to the FoT movement, there are other exciting developments in advanced reasoning. For example, Monte Carlo Tree Search (MCTS) has been explored as a way to systematically search through possible solutions. Combining FoT with MCTS enhancements—such as self-refinement—further improves accuracy.

Self-refinement involves iterative checks and feedback loops: the model doesn’t just solve once; it solves, evaluates, refines, and tries again. Incorporating these refinements into MCTS can push performance even higher, with reported accuracy approaching 100% on some challenging benchmarks. Thus, we see a continuum of innovation: from CoT to ToT, from ToT to FoT, and from FoT to even more sophisticated frameworks involving MCTS and self-refinement.
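The solve, evaluate, refine loop can be sketched generically, with the critic and refiner passed in as functions. To keep it runnable without an LLM, both are hypothetical numeric stand-ins: the “task” is approximating √2, the critic reports the squared error, and the refiner applies a Newton step using that feedback. This shows only the self-refinement loop, not its integration into MCTS.

```python
from typing import Callable, Optional

def self_refine(draft: float,
                critique: Callable[[float], Optional[float]],
                refine: Callable[[float, float], float],
                max_iters: int = 10) -> tuple[float, list[float]]:
    """Solve -> evaluate -> refine -> repeat, until the critic is satisfied or the budget runs out."""
    answer, history = draft, [draft]
    for _ in range(max_iters):
        feedback = critique(answer)
        if feedback is None:  # the critic found nothing left to fix
            break
        answer = refine(answer, feedback)
        history.append(answer)
    return answer, history

# Numeric stand-ins for LLM calls (hypothetical): the 'task' is sqrt(2).
def critique(x: float) -> Optional[float]:
    err = x * x - 2.0
    return None if abs(err) < 1e-9 else err

def refine(x: float, err: float) -> float:
    return x - err / (2.0 * x)  # Newton step driven by the critic's feedback

answer, history = self_refine(1.0, critique, refine)
print(len(history), answer)
```

The `max_iters` budget matters in practice: with a real LLM critic there is no guarantee of convergence, so the loop must stop even if the critic is never satisfied.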

Practical Considerations and Trade-Offs

Of course, these improvements come at a cost. Running multiple trees in parallel, selectively activating branches, performing dynamic corrections, and reaching consensus can be computationally expensive. If you have a time-critical task, like responding to a live query in a few seconds, this method might be too slow or resource-intensive. However, if you can afford more time (perhaps minutes) and have access to significant computational resources, FoT can deliver substantially higher reasoning accuracy and robustness.
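A back-of-the-envelope sketch makes the cost concrete. It assumes (hypothetically) that every active node spawns `branching` children with one generation call each, and that each child is scored by one further call; self-correction and the final vote are ignored. The function name and parameters are illustrative, and the numbers are not measurements from any paper.

```python
def fot_call_estimate(n_trees: int, depth: int, beam_width: int, branching: int,
                      score_calls_per_child: int = 1) -> int:
    """Rough count of model calls under the stated assumptions:
    depth levels x beam_width active nodes x branching children per tree,
    each child costing one generation call plus score_calls_per_child scoring calls."""
    children_per_tree = depth * beam_width * branching
    return n_trees * children_per_tree * (1 + score_calls_per_child)

print(fot_call_estimate(n_trees=4, depth=5, beam_width=3, branching=4))
```

Under these assumptions, 4 trees of depth 5 with 3 active branches and 4 children per branch come to 4 × 5 × 3 × 4 = 240 children, or 480 calls once scoring is included, versus a single call for a plain CoT answer.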

For certain domains—like academic research, strategic planning, complex engineering tasks, or critical medical decision support—the payoff might justify the added complexity and compute resources. For everyday tasks, simpler CoT or ToT strategies might suffice. It’s all about choosing the right tool for the job.

Summary of Forest-of-Thought at a Glance

- Concept: Forest-of-Thought prompting applies multiple reasoning trees in parallel, each representing different perspectives or initial conditions.

- Sparse Activation: Only the most relevant reasoning paths are pursued, saving compute and time.

- Dynamic Self-Correction: Real-time identification and correction of errors prevents them from snowballing.

- Consensus-Based Decision: Subtrees vote on solutions; if a tie occurs, an expert model can resolve it.

- Human-Like Reasoning: FoT mimics how humans solve complex problems by tapping into multiple experts, focusing attention where it matters, and learning from mistakes.

- Test-Time Compute, Not Training: FoT does not alter the model’s parameters—just uses more inference-time reasoning. It’s distinct from test-time training, which adapts the model to new distributions by updating weights.

- Future Directions: Combining FoT with techniques like Monte Carlo tree search and iterative self-refinement further improves performance, scaling closer to optimal solutions.

Looking Ahead

If you found the progression from Chain-of-Thought to Tree-of-Thought and now to Forest-of-Thought exciting, you’re not alone! This field is rapidly evolving, and new research continues to push the boundaries. By combining these prompting strategies with improved scoring functions, domain experts, dynamic correction and consensus-building, researchers are forging a path toward LLMs that reason more like humans.