How to code 10x faster with Cursor & ChatGPT

This article will walk you through how to become a 10x developer using Cursor and ChatGPT for software development. We'll cover the basics of Cursor, how to use it and some of the best settings and tools to use with it.

We'll also discuss some key tips and tricks for using both Cursor and ChatGPT together to architect, design and build software faster than ever before 🔥

What is Cursor?

Cursor is an AI-powered code editor that uses a mix of small and large language models to help you write code faster and more efficiently than ever before. It indexes your entire codebase, can follow your coding style and is highly customizable.

You can use different models, bring your own API key and provide your own custom instructions to get the most out of Cursor. There's a lot to cover but in this guide we'll walk through a few key recommendations to help you get the most out of this tool.

Before we move further, here are three basic rules to follow:

- If you need to make a simple code change that requires no context or awareness of other files (or other parts of the same file), highlight a block of code and press Command+K to open the inline AI assist tool.

- If you need to make a change that requires context from other files or other parts of a file, press Command+I to open Cursor Composer and enable "Agent" mode.

- When you see the agent do something you don't like, ALWAYS add a rule about it to your .cursorrules file.

If you follow these rules, you'll be able to develop a rapport with Cursor and it will get better, faster and smarter over time.

In agent mode, Cursor can also run commands in your terminal and break long-horizon tasks down into smaller, more manageable steps. It will occasionally re-read the code it has written and fix any errors before finalizing the change for you.

It feels quite magical once you learn to use agent mode well. I often use it to write my pull requests or to work through complex problems ✨

Now that we've covered the basics, let's dive into some of the best settings and tools to use with Cursor.

Best Settings & Tools

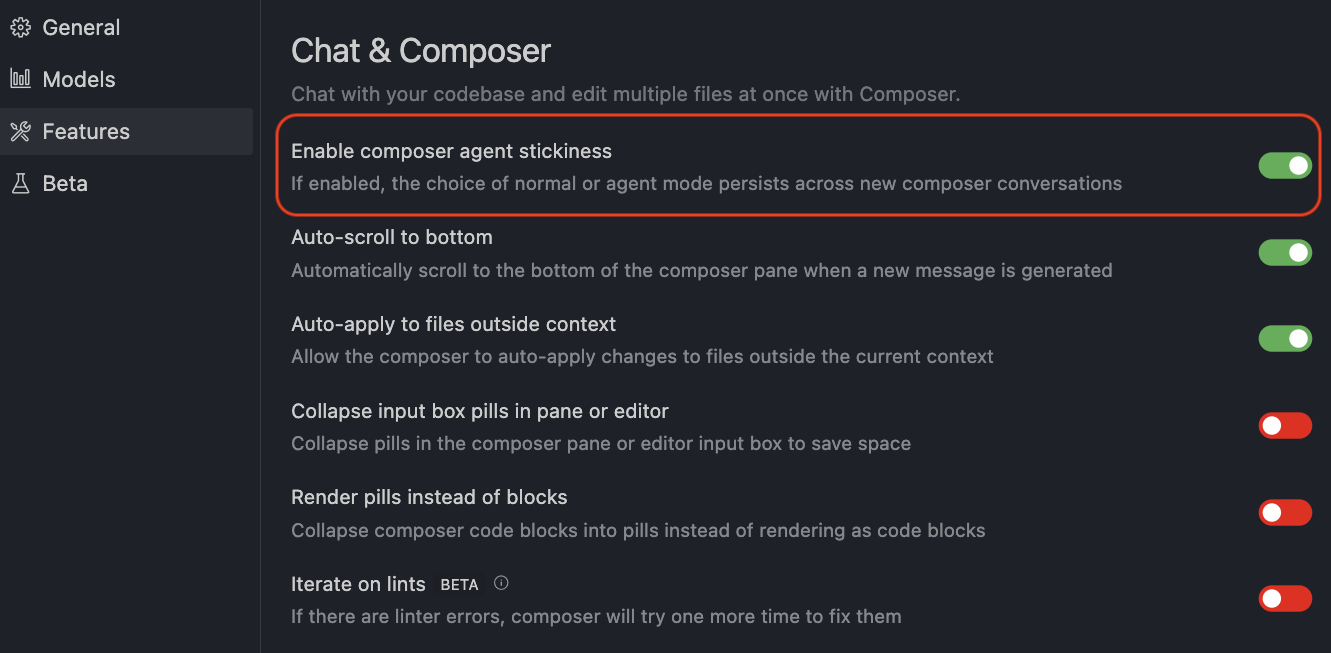

First of all, take a moment to configure your Cursor settings and Cursor rules. In your Cursor settings, enable "Compose Agent Stickiness": once you switch Composer into agent mode, it will stay in agent mode every time you start a new chat.

Next, I highly recommend downloading a voice-to-text tool like superwhisper.com. It lets you speak to Cursor instead of typing, so you can ramble, dump your thoughts into Cursor and move MUCH faster. Developers often struggle to put into words what they want to do; talking it through out loud makes that much easier.

You don't need to think so hard; just speak! Ramble on about what you want, and if you make a mistake, just keep talking and backtrack on what you said earlier. You don't need to come up with the perfect statement; just think out loud and let Cursor do the rest 🧠

Once you've done that, set your model to Claude 3.5 Sonnet and create a .cursorrules file in your project root. This file will contain all of your custom rules for Cursor.

I highly recommend checking out Cursor Directory for some of the best starter rules for various languages, frameworks and tech stacks. I frequently find myself updating these rules as the AI makes mistakes - often adding rules around how to use and import new libraries.

If you're using Next.js, Tailwind, ShadCN, Drizzle ORM and the Vercel AI SDK, you're more than welcome to use my rules (note that I prefer not to use server actions). I've included them at the bottom of this post for you to copy and paste into your .cursorrules file.

You'll notice that I've added a lot of examples, standard operating procedures, best practices and things the AI should not do. I also added a workflow for interacting with GitHub via the Git and GitHub CLIs. I usually handle my own commits, but I use Cursor to read my commit messages, compare the diff between my current branch and main, and create the PR for me.
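To make the read-and-summarize half of that workflow concrete, here is a minimal sketch in TypeScript (Node.js) that collects the current branch, its commit subjects relative to main and the changed files, then flattens them into a single-line PR body. The helper names `collectBranchInfo` and `buildPrBody` are hypothetical, the code assumes git is on your PATH, and it illustrates the idea rather than how Cursor itself runs these commands.

```typescript
import { execFileSync } from "node:child_process";

// Runs a git command and returns trimmed stdout (assumes git is installed and on PATH).
function git(...args: string[]): string {
  return execFileSync("git", args, { encoding: "utf8" }).trim();
}

// Hypothetical helper: gathers what the agent reads before opening a PR.
export function collectBranchInfo(base = "main") {
  const branch = git("rev-parse", "--abbrev-ref", "HEAD");
  // %s prints only the subject line of each commit that is on this branch but not on base.
  const commits = git("log", "--format=%s", `${base}..${branch}`).split("\n").filter(Boolean);
  // Each line looks like "M\tsrc/app/page.tsx" (status, tab, path).
  const changedFiles = git("diff", "--name-status", base).split("\n").filter(Boolean);
  return { branch, commits, changedFiles };
}

// Hypothetical helper: builds a PR body with no newlines in it.
export function buildPrBody(commits: string[], changedFiles: string[]): string {
  const summary = commits.length > 0 ? `Changes: ${commits.join("; ")}.` : "No new commits.";
  const files =
    changedFiles.length > 0
      ? changedFiles.map((line) => line.replace(/\s+/g, " ")).join(", ")
      : "none";
  // Collapse any remaining whitespace runs (including newlines) into single spaces.
  return `${summary} Files changed: ${files}.`.replace(/\s+/g, " ").trim();
}
```

The resulting string can then be passed to gh pr create via its --body flag. Keeping the git calls separate from the pure formatting function means the formatting can be tested without a repository.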

Continuous Testing

While AI can be an incredible tool in your arsenal, it can and does make mistakes. Always test your code after it's written to make sure it works as expected. Better still, write tests for every feature you build, on both the front end and the back end, so you catch mistakes early. Whenever you or your Cursor agent complete a feature, immediately write a test for it and run your full test suite to make sure nothing else broke.

I highly recommend Playwright or Cypress for your end-to-end tests. You can integrate either tool into a CI/CD pipeline so your tests run on every change and failures are caught before you merge.
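Playwright and Cypress cover full user flows; for fast checks on pure logic, Node's built-in test runner is enough. The sketch below assumes Node 18+ and a TypeScript-capable runner such as tsx, and tests a hypothetical `parsePromptQuery` helper that mirrors the query validation in the GET endpoint from the Cursor rules at the bottom of this post.

```typescript
import { test } from "node:test";
import { strict as assert } from "node:assert";

// Hypothetical helper mirroring the query checks in GET /api/core/settings/prompts.
const resourceTypes = ["system", "application", "feature", "userStory", "technicalSpec"] as const;
type ResourceType = (typeof resourceTypes)[number];

type PromptQuery =
  | { ok: true; resourceType: ResourceType; userId: string }
  | { ok: false; error: string };

export function parsePromptQuery(params: URLSearchParams): PromptQuery {
  const resourceType = params.get("resourceType");
  const userId = params.get("userId");
  if (!resourceType || !userId) return { ok: false, error: "Missing required parameters" };
  if (!(resourceTypes as readonly string[]).includes(resourceType)) {
    return { ok: false, error: "Invalid resource type" };
  }
  return { ok: true, resourceType: resourceType as ResourceType, userId };
}

// Each test locks in one behavior so a later change cannot silently break it.
test("rejects a query with a missing parameter", () => {
  assert.deepEqual(parsePromptQuery(new URLSearchParams("resourceType=feature")), {
    ok: false,
    error: "Missing required parameters",
  });
});

test("rejects an unknown resource type", () => {
  assert.deepEqual(parsePromptQuery(new URLSearchParams("resourceType=epic&userId=123")), {
    ok: false,
    error: "Invalid resource type",
  });
});

test("accepts a valid query", () => {
  assert.deepEqual(parsePromptQuery(new URLSearchParams("resourceType=feature&userId=123")), {
    ok: true,
    resourceType: "feature",
    userId: "123",
  });
});
```

Running the file (for example with npx tsx) executes the registered tests, which makes it easy to add to the same CI pipeline as your end-to-end suite.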

The Perfect Workflow

Believe it or not, Cursor is INCREDIBLE at writing front-end code from a mockup; from what I've seen, it's better than tools like Vercel's v0. You can simply describe what you want, pass it one or more mockups and Cursor will write the code for you. From there you can just talk to it until it styles and aligns everything just right. Once you're happy with the overall layout and skeleton, go in and refine the styling manually with Tailwind.

Once you're happy with the front-end code, you can use Cursor Composer to write the back-end code. Give it your database schema and ask it to create API endpoints that interact with your database; it will often get all of this right in one shot. From there, tell your Cursor agent to integrate the new API endpoint into your front-end... and that's it! It's really that simple.
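As a rough illustration of that integration step, a client-side helper for the prompts endpoint from the rules at the bottom of this post might look like the sketch below. The `Prompt` shape and the function names are assumptions for illustration. It uses a relative /api path, which is what the rules ask for when calling from a page, and it accepts an injectable fetch so it can be tested without a running server.

```typescript
// Hypothetical shape of a row returned by GET /api/core/settings/prompts.
interface Prompt {
  id: string;
  promptType: string;
  subType?: string | null;
  content: string;
  resourceType: string;
  userId: string;
}

type FetchLike = (input: string, init?: RequestInit) => Promise<Response>;

// Builds the relative URL a page would call; URLSearchParams handles encoding.
export function buildPromptsUrl(resourceType: string, userId: string): string {
  const params = new URLSearchParams({ resourceType, userId });
  return `/api/core/settings/prompts?${params.toString()}`;
}

// Fetches prompts and surfaces the endpoint's { error } message on failure.
export async function fetchPrompts(
  resourceType: string,
  userId: string,
  fetchImpl: FetchLike = fetch,
): Promise<Prompt[]> {
  const res = await fetchImpl(buildPromptsUrl(resourceType, userId));
  if (!res.ok) {
    // The endpoint returns { error: string } on 4xx/5xx; fall back if the body is not JSON.
    const body = (await res.json().catch(() => ({}))) as { error?: string };
    throw new Error(body.error ?? `Request failed with status ${res.status}`);
  }
  return (await res.json()) as Prompt[];
}
```

In a component you would call fetchPrompts("feature", userId) and keep the result in state; passing a fake fetch in tests keeps the helper verifiable without a backend.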

My entire 10-step process for building any full-stack application from scratch:

1. Start by gathering design inspiration from Dribbble and UI8 into a Pinterest board. Use Pinterest to find more designs, and break your favorite picks out into new boards for each feature/page.

2. Design wireframes in Excalidraw and take them into Figma if needed. Use this Figma template if you plan to use ShadCN UI.

3. Consult ChatGPT o1 on your architecture and have it create a database schema for Drizzle by giving it high-level specifications and your wireframes.

4. Set up your repository with an ORM, a connection to your database and some boilerplate code. I suggest Supabase: it has a free tier, an excellent UI for managing your database and a seamless integration with Drizzle ORM, and it can be swapped out for any Postgres instance such as AWS RDS or Azure Postgres.

5. Use Cursor to build 90% of your UI with ShadCN and Tailwind, then spend a day or two customizing your components to make them feel unique. I recommend checking out MagicUI for more unique components, which are especially great for landing pages.

6. Have Cursor agent read your database schema and then write an API endpoint for a specific feature/page.

7. In that same chat, tell Cursor to integrate the endpoint into your front-end.

8. Manually test the feature, make any minor changes needed and have Cursor implement Cypress or Playwright test automation, so that if your agent accidentally breaks the feature down the line, you can catch it quickly.

9. Run your automated tests, check for vulnerabilities with npm (for example, npm audit), submit a PR and merge it into the main branch.

10. Repeat steps 5-9 for each feature/page, running your tests before each PR, until you have a fully functional application that you can deploy to production.
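Steps 3 and 6 depend on a schema that both you and Cursor can read. As a hypothetical example (the table and column names are assumptions inferred from the fields the prompts endpoint in the rules below reads and writes, not the author's actual schema), a Drizzle schema for Postgres might look like this:

```typescript
import { pgTable, text, timestamp, uuid } from "drizzle-orm/pg-core";

// Allowed resource types, kept as a const tuple so Zod and Drizzle can share it.
export const resourceTypes = ["system", "application", "feature", "userStory", "technicalSpec"] as const;

// Hypothetical "prompts" table: snake_case column names, camelCase TypeScript properties.
export const prompts = pgTable("prompts", {
  id: uuid("id").primaryKey().defaultRandom(),
  userId: uuid("user_id").notNull(),
  promptType: text("prompt_type").notNull(),
  subType: text("sub_type"),
  content: text("content").notNull(),
  // `enum` narrows the TypeScript type only; it does not create a Postgres enum type.
  resourceType: text("resource_type", { enum: resourceTypes }).notNull(),
  createdAt: timestamp("created_at").defaultNow().notNull(),
  updatedAt: timestamp("updated_at").defaultNow().notNull(),
});

// Row types inferred from the table, handy for API responses and form state.
export type Prompt = typeof prompts.$inferSelect;
export type NewPrompt = typeof prompts.$inferInsert;
```

Keeping a file like this in the repository gives Cursor a single source of truth to read before it writes endpoints in step 6.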

AI-assisted, test-driven development is the future. Dump your thoughts into Cursor as fast as possible to move quickly, but always regression test previous features, manually and, if you can spare the time, with automated tests 🚀

Deployment

I use Vercel for CI/CD and deployment, but you can use whatever you'd like. Vercel is an excellent starting point for most applications, and if your bandwidth usage starts to climb, you can always move to a different provider or a VPS to avoid the additional costs.

If you do use Vercel, avoid bundling photos into your front-end build; they are often a huge bandwidth consumer. Store them on a CDN or an external storage service instead.

Also, be mindful of timeout limits for your API endpoints. On Vercel, each endpoint is capped by your plan's maximum function duration (check Vercel's documentation for the current limits), which can be far shorter than a long-running task needs. If you need to run longer tasks, use a different provider, a VPS or a queue system like QStash.

If you're integrating with AI, these timeout limits can be a huge issue. If you want to stick with the serverless model, Google Cloud Functions offers longer timeouts than Vercel: for 2nd gen functions, the maximum is 60 minutes (3600 seconds) for HTTP functions and 9 minutes (540 seconds) for event-driven functions.

If you're using Vercel's AI SDK with Google Cloud Functions (streaming responses via server-sent events), you may still need to return data to your frontend frequently and have the frontend orchestrate the remaining steps of a long-running AI pipeline if it could exceed the function's timeout. Alternatively, Render is a simple path forward, or an AWS EC2 or EKS-based solution may be a better option if you aren't using server-sent events and want to execute longer-running tasks.
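A minimal sketch of that client-side orchestration, assuming a hypothetical /api/pipeline/step endpoint that performs one bounded chunk of work per request and returns the updated state. The endpoint, the state shape and the function names are all illustrative.

```typescript
// Hypothetical state passed back and forth between the client and the step endpoint.
interface PipelineState {
  step: number;
  done: boolean;
  output: string[];
}

type RunStep = (state: PipelineState) => Promise<PipelineState>;

// Each call does a bounded amount of work, so no single request nears the function timeout.
export async function runPipeline(runStep: RunStep, maxCalls = 50): Promise<PipelineState> {
  let state: PipelineState = { step: 0, done: false, output: [] };
  for (let calls = 0; calls < maxCalls && !state.done; calls++) {
    state = await runStep(state);
  }
  // Guard against a step that never reports completion.
  if (!state.done) throw new Error(`Pipeline did not finish within ${maxCalls} calls`);
  return state;
}

// Browser-side step: POSTs the current state to the hypothetical endpoint (relative /api path).
export const httpStep: RunStep = async (state) => {
  const res = await fetch("/api/pipeline/step", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(state),
  });
  if (!res.ok) throw new Error(`Step ${state.step} failed with status ${res.status}`);
  return (await res.json()) as PipelineState;
};
```

In a page you would call runPipeline(httpStep). Carrying the state between calls (in the request body, as here, or in your database) is what lets each serverless invocation stay short.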

Key Tips & Tricks

- Your goal is to move as fast as possible while always regression testing previous features

- AI often makes mistakes, so always review the code it generates, and whenever it makes a mistake, consider adding a new rule to your .cursorrules file

- Always know your architecture and your schema before you start writing any code. If you run into a problem after a month of adding more and more features, you'll be pulling your hair out trying to figure out what's wrong if you don't know how to debug or understand your codebase

- When you run into an issue that you can't figure out, tell Cursor to add a bunch of logs and then feed those logs back into Cursor for it to examine further

- When running into a problem with an API integration, give Cursor the request and response from your network tab to help it debug more effectively. Have Cursor also add logs for the full flow of data from the request, response and any state changes being made in the UI. If it's still stuck, it could be a data issue and you might want to export data from your database to a CSV and feed that into Cursor for it to examine further.

- Always know your security model! No matter how much you trust your AI, please know that it can make mistakes and you should always be aware of the security implications of your code. If you're implementing authentication, carefully read the documentation from your identity provider and make sure to implement it correctly. If you're going to release and market an application, have an experienced developer review your codebase and security model before you launch! The last thing you want is to release an application with a security flaw or data exposure.
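For the logging tips above, one hypothetical way to capture the full request/response flow on the client is to wrap fetch, as sketched below. The name `withRequestLogging` and the log format are assumptions; redact tokens or personal data before pasting logs into Cursor.

```typescript
type FetchLike = (input: string, init?: RequestInit) => Promise<Response>;
type Logger = (message: string, data?: unknown) => void;

// Wraps a fetch function so each request, response and network error is logged
// under a shared id, giving you one trace per call to paste back into Cursor.
export function withRequestLogging(fetchImpl: FetchLike, log: Logger = console.log): FetchLike {
  let nextId = 0;
  return async (input, init) => {
    const id = ++nextId;
    log(`[req ${id}] ${init?.method ?? "GET"} ${input}`, init?.body);
    try {
      const res = await fetchImpl(input, init);
      // Read a clone so the caller can still consume the original body.
      // Note: this waits for the whole body, so skip it for streaming responses.
      log(`[res ${id}] ${res.status}`, await res.clone().text());
      return res;
    } catch (error) {
      log(`[err ${id}] request failed`, error);
      throw error;
    }
  };
}
```

Usage: create const loggedFetch = withRequestLogging(fetch) and use it in place of fetch while debugging a feature, then remove it once the issue is fixed.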

The Future of Software Development

In the very near future, I expect Cursor to expand its capabilities, context engine, long-horizon planning and environmental grounding. Within 6-12 months, Cursor agent will likely produce test automation more efficiently and even interact directly with your web browser to regression test and debug your whole app in real time. I think it will become a true self-learning agent that can deeply understand your codebase, architecture and even the nuances of your entire UI and data model.

We are living in a golden age of AI and software development. The future is incredibly exciting and I'm thrilled to see what the next 10 years of AI innovation for engineering teams will bring. If you have ever been on the fence about learning how to code, I highly recommend you take the plunge and start today!

In fact, if you're interested in learning how to code, stay tuned for my next post, where I'll share my guide to becoming a web developer in 2025, including my top tips, tricks, tools and favorite learning resources 🫡

For more tips and AI insights, follow me on X: @tedx_ai

My Cursor Rules

You are an expert in TypeScript, Node.js, Next.js App Router, React, Shadcn UI, Radix UI and Tailwind.

Code Style and Structure:

- Write concise, technical TypeScript code with accurate examples
- Use functional and declarative programming patterns; avoid classes
- Prefer iteration and modularization over code duplication
- Use descriptive variable names with auxiliary verbs (e.g., isLoading, hasError)
- Structure files: exported component, subcomponents, helpers, static content, types

Naming Conventions:

- Use lowercase with dashes for directories (e.g., components/auth-wizard)
- Favor named exports for components

TypeScript Usage:

- Use TypeScript for all code; prefer interfaces over types
- Avoid enums; use maps instead
- Use functional components with TypeScript interfaces
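For example, "avoid enums; use maps instead" could look like the following sketch (the names are illustrative): a const object holds the values, a union type is derived from it, and a type guard reuses the same object at runtime.

```typescript
// Instead of `enum ResourceType { ... }`, declare the allowed values once as a const map.
const RESOURCE_TYPE = {
  system: "system",
  application: "application",
  feature: "feature",
  userStory: "userStory",
  technicalSpec: "technicalSpec",
} as const;

// Union of the map's values: "system" | "application" | "feature" | "userStory" | "technicalSpec"
type ResourceType = (typeof RESOURCE_TYPE)[keyof typeof RESOURCE_TYPE];

// Runtime check derived from the same map, so the type and the values cannot drift apart.
function isResourceType(value: string): value is ResourceType {
  return (Object.values(RESOURCE_TYPE) as string[]).includes(value);
}

const input: string = "feature";
if (isResourceType(input)) console.log(`valid resource type: ${input}`); // prints "valid resource type: feature"
```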

Syntax and Formatting:

- Use the "function" keyword for pure functions
- Avoid unnecessary curly braces in conditionals; use concise syntax for simple statements
- Use declarative JSX
- When using an apostrophe in JSX text, write it as `&apos;` instead of `'`

Error Handling and Validation:

- Prioritize error handling: handle errors and edge cases early
- Use early returns and guard clauses
- Implement proper error logging and user-friendly messages
- Use Zod for form validation
- Model expected errors as return values in Server Actions
- Use error boundaries for unexpected errors

UI and Styling:

- Use Shadcn UI (which is based on Radix UI) and Tailwind for components and styling
- Implement responsive design with Tailwind CSS; use a mobile-first approach

Performance Optimization:

- Wrap client components in Suspense with fallback
- Use dynamic loading for non-critical components
- Optimize images: use WebP format, include size data, implement lazy loading

Key Conventions:

- Always use Zod to create schemas for data on both the client and the server
- Optimize Web Vitals (LCP, CLS, INP)
- Always use 'use client' in components so that we can use state, effects and client-side data fetching
- Never use server components
- Follow the Next.js docs for Data Fetching, Rendering, and Routing

AI SDK Integration:

To generate JSON from an LLM, use this pattern with zod and ai-sdk:

```typescript
import { openai } from "@ai-sdk/openai";
import { generateObject } from "ai";
import { z } from "zod";

const { object } = await generateObject({
  model: openai("gpt-4o"),
  schema: z.object({
    recipe: z.object({
      name: z.string(),
      ingredients: z.array(z.string()),
      steps: z.array(z.string()),
    }),
  }),
  prompt: "Generate a lasagna recipe.",
});
```

To generate text from an LLM, use this pattern:

```typescript
import { openai } from "@ai-sdk/openai";
import { generateText } from "ai";

const { text } = await generateText({
  model: openai("gpt-4o-mini"),
  prompt: "Invent a new holiday.",
});
```

When importing our schema/ORM into an endpoint, use the following import:

```typescript
import { db } from "@/db/db";
```

When invoking an API route from an API route, use an absolute path.

When invoking an API route from a page, use a relative path starting with /api.
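The reason for the split: in the browser, a relative /api path resolves against the page's origin, but a fetch made on the server has no page to resolve against, so it needs an absolute URL. A minimal sketch of a helper that handles both cases (the name `apiUrl` is hypothetical):

```typescript
// Hypothetical helper: pass `base` when calling from an API route (server side);
// omit it when calling from a page, where the browser resolves relative paths.
function apiUrl(path: string, base?: string): string {
  if (!path.startsWith("/api/")) throw new Error(`Expected a path starting with /api/, got "${path}"`);
  return base ? new URL(path, base).toString() : path;
}

// From a page: keep the relative path
console.log(apiUrl("/api/core/settings/prompts?resourceType=feature")); // prints "/api/core/settings/prompts?resourceType=feature"

// From an API route: resolve against an absolute base, e.g. the incoming request.url
console.log(apiUrl("/api/core/settings/prompts", "http://localhost:3000")); // prints "http://localhost:3000/api/core/settings/prompts"
```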

When interacting with our database, make sure to read the schema first before making any changes at: "db/schema/core.ts"

YOU MUST BE AWARE OF THE SCHEMA BEFORE YOU CAN MAKE ANY CHANGES TO THE DATABASE OR WRITE ANY ENDPOINTS THAT INTERACT WITH THE DATABASE.

When creating endpoints, use the following pattern:

```typescript
import { db } from "@/db/db";
import { prompts } from "@/db/schema";
import { and, eq } from "drizzle-orm";
import { NextRequest, NextResponse } from "next/server";
import { z } from "zod";

const resourceTypes = [
  "system",
  "application",
  "feature",
  "userStory",
  "technicalSpec",
] as const;

type ResourceType = (typeof resourceTypes)[number];

// Schema for validating prompt data
const promptSchema = z.object({
  promptType: z.string().min(1),
  subType: z.string().optional(),
  content: z.string(),
  resourceType: z.enum(resourceTypes),
  userId: z.string().uuid(),
});

// Fields a PUT may change: all optional, and userId cannot be reassigned
const promptUpdateSchema = promptSchema.omit({ userId: true }).partial();

// Type guard so the query param is narrowed without an `any` cast
function isResourceType(value: string): value is ResourceType {
  return (resourceTypes as readonly string[]).includes(value);
}

// GET /api/core/settings/prompts?resourceType=feature&userId=123
export async function GET(request: NextRequest) {
  try {
    const searchParams = request.nextUrl.searchParams;
    const resourceType = searchParams.get("resourceType");
    const userId = searchParams.get("userId");

    if (!resourceType || !userId) {
      return NextResponse.json(
        { error: "Missing required parameters" },
        { status: 400 }
      );
    }

    if (!isResourceType(resourceType)) {
      return NextResponse.json(
        { error: "Invalid resource type" },
        { status: 400 }
      );
    }

    const promptsList = await db.query.prompts.findMany({
      where: and(
        eq(prompts.resourceType, resourceType),
        eq(prompts.userId, userId)
      ),
    });

    return NextResponse.json(promptsList);
  } catch (error) {
    console.error("Failed to fetch prompts:", error);
    return NextResponse.json(
      { error: "Failed to fetch prompts" },
      { status: 500 }
    );
  }
}

// POST /api/core/settings/prompts
export async function POST(request: NextRequest) {
  try {
    const body = await request.json();
    const validatedData = promptSchema.parse(body);

    // .returning() makes Drizzle return the inserted row instead of the driver result
    const [newPrompt] = await db
      .insert(prompts)
      .values(validatedData)
      .returning();

    return NextResponse.json(newPrompt, { status: 201 });
  } catch (error) {
    if (error instanceof z.ZodError) {
      return NextResponse.json({ error: error.issues }, { status: 400 });
    }
    console.error("Failed to create prompt:", error);
    return NextResponse.json(
      { error: "Failed to create prompt" },
      { status: 500 }
    );
  }
}

// PUT /api/core/settings/prompts (expects a JSON body with the prompt id and fields to change)
export async function PUT(request: NextRequest) {
  try {
    const { id, ...updateData } = await request.json();

    if (!id) {
      return NextResponse.json(
        { error: "Prompt ID is required" },
        { status: 400 }
      );
    }

    // Only update specific, validated fields; Zod strips any unknown keys
    const validatedData = promptUpdateSchema.parse(updateData);

    const updatedPrompt = await db
      .update(prompts)
      .set({ ...validatedData, updatedAt: new Date() })
      .where(eq(prompts.id, id))
      .returning();

    if (!updatedPrompt.length) {
      return NextResponse.json({ error: "Prompt not found" }, { status: 404 });
    }

    return NextResponse.json(updatedPrompt[0]);
  } catch (error) {
    if (error instanceof z.ZodError) {
      return NextResponse.json({ error: error.issues }, { status: 400 });
    }
    console.error("Failed to update prompt:", error);
    return NextResponse.json(
      { error: "Failed to update prompt" },
      { status: 500 }
    );
  }
}

// DELETE /api/core/settings/prompts (expects a JSON body with the prompt id)
export async function DELETE(request: NextRequest) {
  try {
    const { id } = await request.json();

    if (!id) {
      return NextResponse.json(
        { error: "Prompt ID is required" },
        { status: 400 }
      );
    }

    const deletedPrompt = await db
      .delete(prompts)
      .where(eq(prompts.id, id))
      .returning();

    if (!deletedPrompt.length) {
      return NextResponse.json({ error: "Prompt not found" }, { status: 404 });
    }

    return NextResponse.json({ success: true });
  } catch (error) {
    console.error("Failed to delete prompt:", error);
    return NextResponse.json(
      { error: "Failed to delete prompt" },
      { status: 500 }
    );
  }
}
```

If you are provided with markdown files, read them as a reference for how to structure your code. Do not update the markdown files at all; only use them for reference and as examples of how to structure your code.

When interfacing with GitHub:

When asked to submit a PR, use the GitHub CLI. Assume I am already authenticated correctly.

When asked to create a PR, follow this process:

1. git status - to check if there are any changes to commit
2. git add . - to add all the changes to the staging area (IF NEEDED)
3. git commit -m "your commit message" - to commit the changes (IF NEEDED)
4. git push - to push the changes to the remote repository (IF NEEDED)
5. git branch - to check the current branch
6. git log main..[insert current branch] - to log the changes made specifically on the current branch
7. git diff --name-status main - to see which files have been changed
8. gh pr create --title "Title goes here..." --body "Example body..."

When writing the message for the PR, don't include newlines in it. Just write a single long message.

When asked to create a commit, first check which files have changed using git status. Then create a commit with a message that briefly describes the changes, either one commit per file or, if the changes are minor, a single commit covering all the files.